Hello fellow applied linguists.

This page is the Appendix to my paper for the 2009 Temple University Applied Linguistics Colloquium. It describes the following resources:

- AntConc Concordancer

- Compleat Lexical Tutor

- David Lee’s Devoted to Corpora

AntConc Concordancer

To start, the tool that I use for most of my analysis is the AntConc concordance program, developed by Laurence Anthony of Waseda University in Tokyo, Japan.

This is a freeware program, and it is extremely handy because it runs without being installed on your computer. You can simply keep it on a flash memory drive and use it on any computer. It runs on Windows, Macintosh, and Linux.

It also contains various tools in addition to the concordancer. According to Professor Anthony’s description, it contains the following:

- Concordance

- Concordance Plot

- File View

- Clusters

- N-Grams

- Collocates

- Word List

- Keyword List

I will only explain a few of these, but I recommend downloading the program and reading the explanation text that accompanies it.

The tool I use is the “word list”, which takes the words in the corpus and ranks them from most to least frequent. In Parise’s Building and Investigating a Written Corpus of Japanese Junior High School Learners (2010), the word lists were produced by this tool.
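For readers curious about what a word list involves, here is a minimal Python sketch of the idea. It is not AntConc's own code: the function name `word_list`, the sample text, and the simple tokenizing rule (lowercase runs of letters and apostrophes) are all assumptions made for illustration.

```python
import re
from collections import Counter


def word_list(text):
    """Return (word, frequency) pairs ranked from most to least frequent."""
    # Assumption: a token is a run of lowercase letters or apostrophes.
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tokens)
    # Sort by descending frequency, then alphabetically so ties are stable.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical learner-style sample text.
sample = "I like tennis. I like music. I play tennis every day."

for rank, (word, freq) in enumerate(word_list(sample), start=1):
    print(rank, word, freq)
```

A real corpus would be read from text files rather than a string, and the tokenizing rule would need more care (numbers, hyphens, curly apostrophes), but the ranking step stays the same.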

The “concordance” function takes a word, such as one found in the word list, and traces it back to its original context at the sentence level. With the “concordance plot” function we can see where the word and its concordance lines occur within the text itself. This gives us a sense of the word’s distribution: is it used throughout the text, or only in isolated sections?

Finally, “file view” allows the researcher to see the word or concordance in its “natural habitat”, the original text, in order to re-contextualize how it is used.
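The two ideas in this paragraph can be sketched in a few lines of Python. This is only an illustration under my own assumptions, not how AntConc is implemented: the names `tokenize`, `kwic` and `plot_positions` are hypothetical, and the context is a fixed window of words rather than a full sentence.

```python
import re


def tokenize(text):
    # Assumption: a token is a run of lowercase letters or apostrophes.
    return re.findall(r"[a-z']+", text.lower())


def kwic(tokens, keyword, width=3):
    """Return (left context, keyword, right context) for each hit."""
    lines = []
    for i, token in enumerate(tokens):
        if token == keyword:
            left = " ".join(tokens[max(0, i - width):i])
            right = " ".join(tokens[i + 1:i + 1 + width])
            lines.append((left, token, right))
    return lines


def plot_positions(tokens, keyword):
    """Return each hit's relative position, from 0.0 (start) to 1.0 (end)."""
    last = max(len(tokens) - 1, 1)  # avoid dividing by zero on tiny texts
    return [i / last for i, token in enumerate(tokens) if token == keyword]


# Hypothetical sample text.
sample = "I enjoy tennis. My brother plays soccer. We enjoy music on Sunday."
tokens = tokenize(sample)

for left, key, right in kwic(tokens, "enjoy"):
    print(f"{left:>25} [{key}] {right}")

print(plot_positions(tokens, "enjoy"))
```

Positions bunched near one value suggest the word appears in an isolated section, while positions spread between 0.0 and 1.0 suggest it is used throughout the text.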

For more information about this tool, see Laurence Anthony’s site: http://www.antlab.sci.waseda.ac.jp/

Compleat Lexical Tutor

Another tool that I strongly recommend, and which is probably already well known in applied and corpus linguistics circles, is Tom Cobb’s “Compleat Lexical Tutor”, an Internet site devoted to “data-driven learning”. A very nice aspect of this site is that it provides tools not only for corpus building and research, but also for teachers and students.

The most helpful tools on this site for my own teaching are the concordance and the vocabulary profile tools.

The concordance tool (highlighted in yellow) allows the user to search various corpora (Brown, BNC spoken and written, US TV talk, learner corpora, etc.). The concordance lets you look at instances of language in their native context, giving the researcher or student access to collocational information.

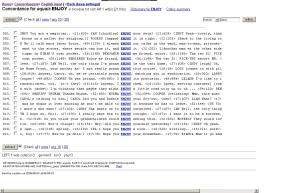

For example, if you want to see which words collocate with enjoy in US English, type the word and select US TV Talk. You also have the option of highlighting the word to the left or right of the search word. Then push submit, and you have your concordances.

As for the vocabulary profiles, rather than reinvent the wheel, I have an excellent PDF by a fellow teacher, Jean-Pierre Richard, who wrote about the vocabulary profile tool for his colleagues.
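To show why the left and right sides matter, here is a rough Python sketch that counts the words immediately next to a search word. It is not the Compleat Lexical Tutor's method: the function name `neighbors`, the sample sentences, and the choice to ignore sentence boundaries are assumptions made to keep the example short.

```python
import re
from collections import Counter


def neighbors(text, keyword, side="right"):
    """Count the words found directly to the left or right of the keyword."""
    # Assumption: a token is a run of lowercase letters or apostrophes.
    tokens = re.findall(r"[a-z']+", text.lower())
    offset = 1 if side == "right" else -1
    counts = Counter()
    for i, token in enumerate(tokens):
        j = i + offset
        # Skip hits at the very start or end of the text.
        if token == keyword and 0 <= j < len(tokens):
            counts[tokens[j]] += 1
    return counts.most_common()


# Hypothetical sample sentences, not taken from any real corpus.
sample = "I enjoy playing tennis. They enjoy playing games. We really enjoy music."

print("right:", neighbors(sample, "enjoy", side="right"))
print("left: ", neighbors(sample, "enjoy", side="left"))
```

In this toy sample the right side shows what is enjoyed (an -ing form or a noun), while the left side shows who enjoys it and any adverb, which is the kind of pattern a student can notice when the concordance is arranged by one side.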

Please look here for the PDF: English in Action at Ichikawa

David Lee’s Devoted to Corpora

The final part of this guide introduces a main resource for corpus linguistics: David Lee’s Bookmarks for Corpus-based Linguists.

Lee offers excellent commentaries along with lists of corpora, collections, data archives, multilingual corpora, and parallel corpora, some of which are freely available to download and others available for purchase. He also lists software tools and frequency lists, and ranks them according to user-friendliness. Visitors will also find relevant references, journals, and papers, some of which are accessible as e-journals. There is a section for teaching as well. The downside, as Lee admits on the opening page, is that the site needs maintenance and some of the links may be dead ends. Regardless, it is one of the best sites on the subject and a great start for those who want a general view of what is available.

Reference list

Parise, P. (2010). Building and investigating a written corpus of junior high school learners. In Richards, J-P. J., & Fushino, K. (Eds.), Temple University Japan Proceedings of the 2009 Applied Linguistics Colloquium (pp. 97-103). Tokyo: Temple University.

Richard, J.P. (2007). Vocabprofile. English in Action in Ichikawa, (1)2.

Fantastic, I hadn’t heard about this topic up to the present. Thx!

Thank you cicJolveStile,

Anytime! I hope you can make use of these tools, especially if you are a teacher.

I’d like to add a link to PMSE – a programmatic environment for corpus processing: http://www.petamem.com/solutions/sector/academic/PMSE/

This tool consists of three functional blocks: text acquisition, data analysis and visualisation. It was designed for educational and research purposes. It is mainly statistically based, so you can analyze almost any language, provided your text is encoded in UTF-8.

Thank you.

Thank you jiri for the post!

At some point I intend to write a follow-up to this article with an updated tools guide. The direction may go toward applied linguistics rather than natural language processing, but I would be open to suggestions.

The hardest thing to surmount is that teachers rarely embrace corpus tools due to their technical nature. In my view, simple is better for teachers. During a training session I showed Wordle to a group of teachers, and they were enamored with it, not only because of the end product but also because the process was easy.

Anyway, I invite more suggestions like this one. Please let me know.

Hello Peter,

Almost a year later, I’d like to give a status update on Jiri’s post. The PetaMem Corpus Tool homepage now resides at http://petamem.corpus.technology.

Cheers,